The focus of this blog is to provide an overview of serverless computing and the Knative platform, its features, and its benefits. The goal is to explain how Knative can help organizations build and deploy serverless applications more efficiently and effectively.

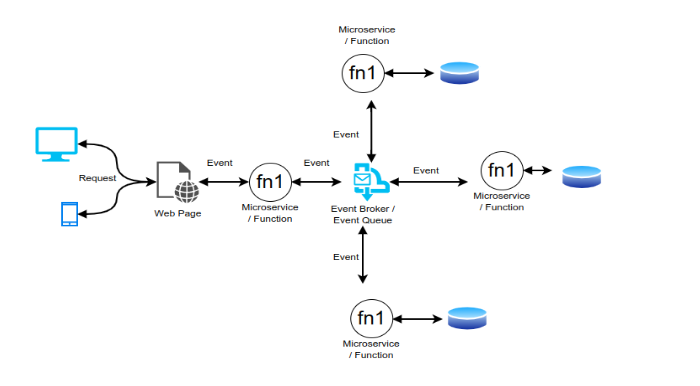

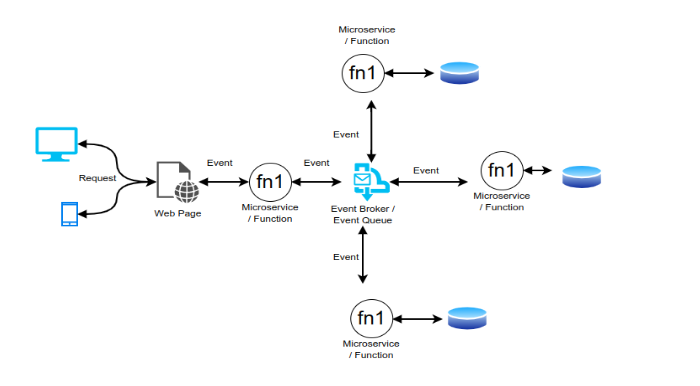

In the mid-2000s, a few developments laid the foundation for what would eventually become serverless computing. One key development was the emergence of cloud computing, which made it possible to provision computing resources on demand and at scale, without the need for on-premises infrastructure. Another was the rise of event-driven computing, which allowed developers to trigger code execution in response to events such as changes to a database, a new file appearing in a storage bucket, or a new message arriving in a queue. This event-driven approach enabled developers to build highly responsive applications that could scale automatically with demand.

Sample event-driven application

What is Serverless Computing?

Serverless computing allows organizations to focus on building and deploying their applications without having to worry about the underlying infrastructure. This can reduce the cost and complexity of managing and scaling applications. It can also help organizations innovate faster, because new applications and features can be built and deployed more quickly and at a lower cost. Finally, serverless computing can improve the reliability and availability of applications, since the cloud provider typically manages the infrastructure and supplies built-in availability and scaling features.

Serverless computing first emerged as a cloud computing model in which the cloud provider manages the infrastructure and dynamically allocates machine resources to run users’ applications. This approach eliminates the need for users to provision or manage any servers. Instead, users are charged only for the resources their application actually consumes, typically measured in invocations, execution time, and data processed.
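To make the pay-per-use model concrete, the following Python sketch estimates a monthly bill from those three quantities. The rates are hypothetical placeholders, not any provider's pricing, and weighting execution time by allocated memory (GB-seconds) is an assumption modeled on how AWS Lambda bills compute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    # HYPOTHETICAL example rates, not real provider pricing.
    per_million_invocations: float  # charge per 1,000,000 invocations
    per_gb_second: float            # charge per GB-second of execution (memory x time)
    per_gb_data: float              # charge per GB of data processed


def monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                 data_gb: float, rates: Rates) -> float:
    """Pay-per-use estimate: pay only for requests served, compute time used, and data processed."""
    request_fee = invocations / 1_000_000 * rates.per_million_invocations
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_fee = gb_seconds * rates.per_gb_second
    data_fee = data_gb * rates.per_gb_data
    return round(request_fee + compute_fee + data_fee, 2)


rates = Rates(per_million_invocations=0.20, per_gb_second=0.00001, per_gb_data=0.09)
# 3 million requests of 120 ms each at 512 MB, processing 10 GB of data:
# 0.60 (requests) + 1.80 (180,000 GB-seconds) + 0.90 (data)
print(monthly_cost(3_000_000, 120, 512, 10, rates))  # 3.3
# An idle application generates no usage and therefore no charge.
print(monthly_cost(0, 120, 512, 0, rates))  # 0.0
```

The idle case is the key contrast with always-on servers: with no invocations, every term of the bill is zero.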

Because serverless platforms like Knative are built on top of Kubernetes, they can be deployed anywhere Kubernetes runs, which removes the dependency on a particular cloud infrastructure. This means that any organization can benefit from serverless features, whether or not it moves to the cloud. For example, an organization with a shared infrastructure team can deploy a serverless platform using Kubernetes and Knative on virtual machines (VMs) in its own data center. Alternatively, it can build the platform on top of a cloud platform like AWS, Azure, or GCP, or use a managed Knative-based serverless platform in the cloud, such as Google Cloud Run or IBM Cloud Code Engine.

The first widely adopted serverless computing platform, AWS Lambda, was introduced by Amazon Web Services in 2014. Since then, other cloud providers such as Microsoft Azure, Google Cloud Platform, and IBM Cloud have also introduced their own serverless computing offerings, further expanding the capabilities and options available to developers.

One of the latest additions to the serverless ecosystem is Knative, an open-source project that provides a set of building blocks for deploying and managing serverless applications on Kubernetes. Knative abstracts away many of the complexities of deploying and managing serverless applications on Kubernetes, making it easier for developers to focus on writing code.

What is Knative?

Knative is a serverless application layer designed specifically for developers, and it works well alongside existing Kubernetes application constructs. It is a CNCF (Cloud Native Computing Foundation) incubating project, backed by a community of experts and organizations committed to advancing cloud-native technologies.

Knative provides an essential set of components for building and running serverless applications on Kubernetes. Created with contributions from over 50 different companies, Knative includes features like scale-to-zero, autoscaling, and an eventing framework for cloud-native applications. (Knative originally also offered in-cluster builds through Knative Build, which has since been replaced by Tekton Pipelines.) Knative can be used on-premises, in the cloud, or in a third-party data center. Most importantly, Knative lets developers focus on writing code without needing to worry about the challenging and mundane aspects of deploying and managing their applications.

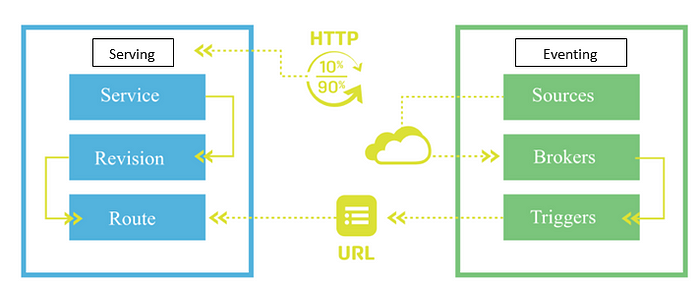

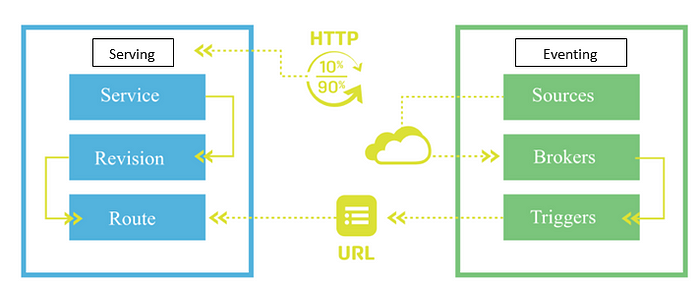

Knative is made up of two components: “Knative Serving,” a container runtime that automatically scales based on HTTP requests, and “Knative Eventing,” a routing layer for events sent asynchronously over HTTP.

*Reference: Knative home page*

Knative Serving

Knative Serving is responsible for handling incoming requests and autoscaling applications based on traffic. When a request is received, Knative Serving routes it to the appropriate service, which processes the request and returns a response. Routing is based on the request's host name and on the traffic rules defined by the service's Route, which can split requests across Revisions by percentage.
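A Route can send a fixed percentage of traffic to each Revision, which is how gradual (canary) rollouts are done in Knative. The Python sketch below models that per-request weighted choice. Knative requires a Route's traffic percentages to add up to 100, and the sketch checks the same rule. The revision names are hypothetical, and in a real cluster the split is carried out by Knative's networking layer rather than by code like this.

```python
import random
from typing import Dict


def pick_revision(traffic: Dict[str, int], rng: random.Random) -> str:
    """Choose the Revision that serves one request, weighted by its traffic percentage."""
    if sum(traffic.values()) != 100:
        raise ValueError("traffic percentages must add up to 100")
    roll = rng.randrange(100)  # uniform integer in [0, 100)
    cumulative = 0
    for revision, percent in traffic.items():
        cumulative += percent
        if roll < cumulative:  # roll falls inside this revision's slice
            return revision
    raise RuntimeError("unreachable: percentages sum to 100")


# Hypothetical canary: 90% to the current revision, 10% to the new one.
traffic = {"hello-00001": 90, "hello-00002": 10}
rng = random.Random(7)  # seeded so the run is repeatable
counts = {name: 0 for name in traffic}
for _ in range(10_000):
    counts[pick_revision(traffic, rng)] += 1
print(counts)  # roughly 9,000 vs 1,000
```

Promoting the new revision is then just a matter of changing the weights (for example to 50/50, then 0/100) without redeploying any code.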

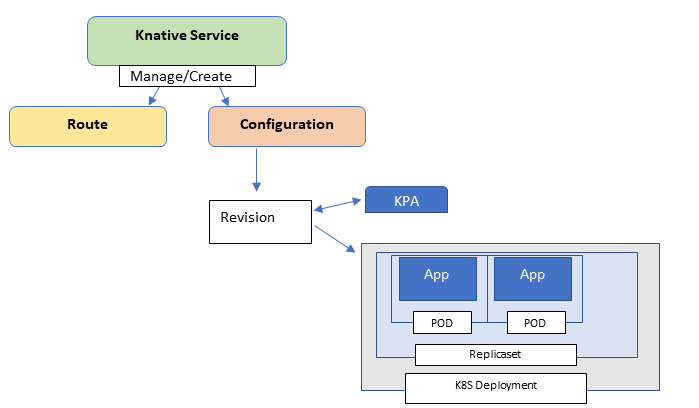

How does Knative Serving work behind the scenes?

Knative Serving

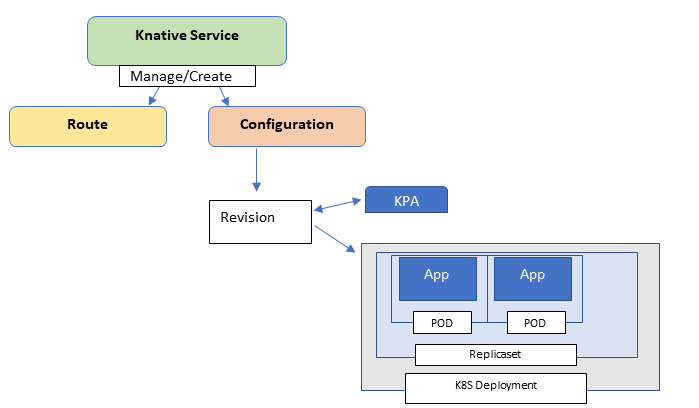

- The developer deploys a serverless workload on Knative Serving by creating a Knative Service object, a Kubernetes custom resource defined by a Custom Resource Definition (CRD).

- Knative Serving then automatically creates and manages the related resources: Routes, Configurations, and Revisions.

- The Service resource manages the entire lifecycle of the workload and creates other objects such as Routes and Configurations.

- Routes define the network endpoint that will be used to access the workload, and Configurations define the desired state of the workload deployment.

- When a Configuration is modified, it generates a new Revision, which represents a snapshot of the code and configuration at that specific time.

- Revisions are immutable objects that are scaled up or down automatically based on incoming traffic. By default, Knative Serving scales Revisions with the Knative Pod Autoscaler (KPA), which applies the configured scaling rules to observed request metrics such as concurrency (in-flight requests) or requests per second. Knative can alternatively be configured to use the Kubernetes Horizontal Pod Autoscaler (HPA), which scales on CPU or memory utilization but cannot scale to zero.

- KPA provides a more responsive and dynamic scaling experience by automatically scaling to zero when there is no incoming traffic, and quickly scaling up when there is a sudden increase in traffic.

- The serverless workload is accessed by sending requests to the network endpoint defined by the Route resource.
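The scaling behavior described above can be sketched in Python. The first function builds a Knative Service object as a plain dictionary, placing Knative's `autoscaling.knative.dev/*` annotations on the revision template; the second applies a simplified version of the KPA's stable-mode rule: average in-flight requests divided by (per-pod target × target utilization), rounded up. The defaults of 100 concurrent requests per pod and 70% utilization follow Knative's documented defaults. The container image is a hypothetical placeholder, and the real KPA adds a panic mode, scale-rate limits, and a scale-to-zero grace period that are omitted here.

```python
import math
from typing import Dict, Sequence

PREFIX = "autoscaling.knative.dev/"


def service_manifest(name: str, image: str, target: int = 100, utilization_pct: int = 70,
                     min_scale: int = 0, max_scale: int = 0) -> dict:
    """A Knative Service as a plain dict (the same shape you would apply with kubectl)."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {  # changing this template makes Knative create a new Revision
                "metadata": {"annotations": {
                    PREFIX + "target": str(target),
                    PREFIX + "target-utilization-percentage": str(utilization_pct),
                    PREFIX + "min-scale": str(min_scale),
                    PREFIX + "max-scale": str(max_scale),  # 0 means no upper bound
                }},
                "spec": {"containers": [{"image": image}]},
            }
        },
    }


def desired_pods(concurrency_samples: Sequence[float], annotations: Dict[str, str]) -> int:
    """Simplified KPA stable-mode decision (panic mode and rate limits omitted)."""
    target = float(annotations[PREFIX + "target"])
    utilization = float(annotations[PREFIX + "target-utilization-percentage"]) / 100
    min_scale = int(annotations[PREFIX + "min-scale"])
    max_scale = int(annotations[PREFIX + "max-scale"])
    avg = sum(concurrency_samples) / len(concurrency_samples) if concurrency_samples else 0.0
    pods = math.ceil(avg / (target * utilization))  # no traffic -> 0 pods (scale to zero)
    pods = max(pods, min_scale)
    return min(pods, max_scale) if max_scale > 0 else pods


svc = service_manifest("hello", "registry.example.com/hello:1.0")  # hypothetical image
ann = svc["spec"]["template"]["metadata"]["annotations"]
print(desired_pods([0, 0, 0], ann))        # 0
print(desired_pods([210, 210, 210], ann))  # 3
```

With an effective target of 70 in-flight requests per pod, an average of 210 needs three pods, and an idle window scales the Revision to zero unless `min-scale` keeps some pods warm.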

Knative Eventing

Knative Eventing is a set of APIs that enable an event-driven architecture for applications. With these APIs, we can build components that route events from event producers to event consumers, known as sinks. Sinks receive events and can also respond to HTTP requests by sending a response event.

Communication between event producers and sinks in Knative Eventing is based on standard HTTP POST requests. The events themselves follow the CloudEvents specification, which makes it easy to create, parse, send, and receive events in any programming language.
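In the CloudEvents HTTP binding's binary content mode, the event's required context attributes (`specversion`, `id`, `source`, `type`) travel as `ce-`-prefixed HTTP headers and the event data travels as the request body. The Python sketch below builds such a POST with the standard library, without sending it, and shows how a sink would read it back. The event type, source, and target URL are hypothetical, and production code would typically use a CloudEvents SDK instead.

```python
import json
import urllib.request
import uuid
from typing import Any, Dict, Tuple

REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def to_binary_http(event_type: str, source: str, data: Dict[str, Any],
                   url: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST carrying a CloudEvent in binary content mode."""
    headers = {
        "ce-specversion": "1.0",
        "ce-id": str(uuid.uuid4()),  # source + id must identify the event uniquely
        "ce-source": source,
        "ce-type": event_type,
        "Content-Type": "application/json",  # describes the body, i.e. the event data
    }
    body = json.dumps(data).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def from_binary_http(headers: Dict[str, str], body: bytes) -> Tuple[Dict[str, str], Any]:
    """What a sink does on receipt: rebuild attributes from ce-* headers and decode the data."""
    lowered = {name.lower(): value for name, value in headers.items()}  # names are case-insensitive
    attributes = {name[len("ce-"):]: value for name, value in lowered.items()
                  if name.startswith("ce-")}
    missing = [name for name in REQUIRED_ATTRIBUTES if name not in attributes]
    if missing:
        raise ValueError(f"not a CloudEvent, missing attributes: {missing}")
    return attributes, json.loads(body)


# Hypothetical producer-side usage; the URL is a placeholder and nothing is sent.
request = to_binary_http("com.example.order.created", "/orders", {"orderId": 42},
                         "http://broker.example.invalid/default")
attributes, data = from_binary_http(dict(request.header_items()), request.data)
print(attributes["type"], data["orderId"])  # com.example.order.created 42
```

Sending the event would be a single `urllib.request.urlopen(request)` call against a real sink or broker address; the receiving side only needs ordinary HTTP handling plus the header-to-attribute mapping shown above.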

Knative Eventing components are loosely coupled, which means that they can be developed and deployed independently of each other. This flexibility allows event producers to generate events before active event consumers are listening for them. Similarly, event consumers can express interest in a particular class of events before any producers are creating those events.
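The loose coupling described above can be illustrated with a toy, in-memory broker in Python. Producers publish without knowing who, if anyone, is listening, and consumers subscribe with an exact-match filter on event attributes, similar in spirit to a Knative Trigger filter. This is a hypothetical model, not the Knative Broker implementation, which delivers events to sinks over HTTP and supports retries and dead-letter sinks.

```python
from typing import Callable, Dict, List, Tuple

Event = Dict[str, str]
Sink = Callable[[Event], None]


class InMemoryBroker:
    """Toy broker: routes each published event to every subscriber whose filter matches."""

    def __init__(self) -> None:
        self._triggers: List[Tuple[Dict[str, str], Sink]] = []

    def subscribe(self, attribute_filter: Dict[str, str], sink: Sink) -> None:
        # A consumer can declare interest before any producer emits matching events.
        self._triggers.append((dict(attribute_filter), sink))

    def publish(self, event: Event) -> int:
        """Deliver to matching sinks; returns how many sinks received the event."""
        delivered = 0
        for attribute_filter, sink in self._triggers:
            if all(event.get(key) == value for key, value in attribute_filter.items()):
                sink(event)
                delivered += 1
        return delivered


broker = InMemoryBroker()
# A producer can publish before any consumer exists; the event simply matches no one.
print(broker.publish({"type": "com.example.order.created", "source": "/orders", "id": "1"}))  # 0

received: List[Event] = []
broker.subscribe({"type": "com.example.order.created"}, received.append)
broker.publish({"type": "com.example.order.created", "source": "/orders", "id": "2"})
broker.publish({"type": "com.example.order.shipped", "source": "/orders", "id": "3"})
print([event["id"] for event in received])  # ['2']
```

Neither side references the other directly: the producer only knows the broker, and the consumer only knows which event attributes it cares about. In this sketch, an event that matches no subscriber is not stored for later consumers.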

Use cases for Knative

Knative is a platform for building, deploying, and managing modern, cloud-native applications, and is commonly used for a variety of use cases, including:

- Web Applications: Knative can be used to build and deploy web applications, providing a scalable platform for delivering dynamic, interactive web experiences.

- Backend Services: Knative can be used to build and deploy backend services, such as APIs and data processing pipelines, that must absorb large and variable volumes of requests and data.

- Microservices: Knative can be used to build and deploy microservices, providing a flexible platform for building complex, highly distributed applications.

The benefits of using Knative in these use cases include:

- Increased Agility: Knative provides a flexible and scalable platform for building, deploying, and managing modern, cloud-native applications, making it possible to respond quickly to changing business needs and bring new features and capabilities to market sooner.

- Reduced Costs: Knative allocates resources only when they are needed and can scale idle services to zero, reducing the cost of running your applications.

- Improved Scalability: Knative adds and removes instances of your application automatically as load changes, so it can handle large numbers of users and large volumes of data without anyone manually provisioning capacity (the cluster itself still needs enough nodes to run the additional instances).

Conclusion

Knative provides a powerful and flexible platform for building and deploying modern, cloud-native applications with the benefits of autoscaling and event-driven capabilities. Building on Kubernetes and a pluggable networking layer such as Kourier, Knative lets you deploy and manage your serverless applications and functions with ease, all within a familiar and established environment. Whether you are looking to build an event-driven architecture or simply need a more efficient way to run your applications at scale, Knative can help.

As for the future of serverless computing, we expect continued growth and adoption in the coming years as more organizations look for ways to reduce costs, increase agility, and improve scalability. Knative is well positioned to play a key role in this future by providing a flexible and scalable platform for building, deploying, and managing serverless applications.

Links

- https://knative.dev/docs/getting-started/

- About installing Knative — Knative

- https://tekton.dev/

FAQ

Q1. What is Knative Build?

Knative Build was a component of the Knative platform that enabled you to automatically build container images from source code. It provided a simple and flexible way to build, test, and deploy container images without having to manage complex build pipelines or infrastructure.

Knative Build has been deprecated in favor of Tekton Pipelines, which is a standalone project rather than a component of Knative.

Tekton Pipelines is a powerful and flexible platform for building, testing, and deploying container images in a Kubernetes-native way. It provides a rich set of building blocks that can be combined to create complex CI/CD pipelines, and it supports a wide range of programming languages and software-build systems.

Knative Build is no longer developed, so users are encouraged to use Tekton Pipelines for building and deploying container images in a Kubernetes environment. Tekton Pipelines is also widely used in other Kubernetes platforms, for example as the basis of Red Hat OpenShift Pipelines.

Q2. What are some managed Knative options?

Several managed Knative options provide a platform for building, deploying, and managing serverless workloads on Kubernetes. Each of the options below lets you deploy containerized applications and functions to a managed Knative-based environment that scales up or down based on demand.

Google Cloud Run: Google Cloud Run is a fully managed Knative-based platform on Google Cloud Platform (GCP). Cloud Run also provides integration with other GCP services, such as Cloud Build and Cloud Logging.

Red Hat OpenShift Serverless: Red Hat OpenShift Serverless delivers Knative on Red Hat OpenShift, with installation and upgrades handled by an operator. OpenShift Serverless also integrates with other Red Hat OpenShift capabilities, such as OpenShift Builds and the Tekton-based OpenShift Pipelines.

IBM Cloud Code Engine: IBM Cloud Code Engine is built on top of Knative and provides a simplified experience for deploying containerized workloads to a fully managed Knative environment. Code Engine also provides integration with other IBM Cloud services, such as IBM Cloud Object Storage and IBM Cloud Databases.

Q3. What other serverless solutions run on top of Kubernetes?

Several serverless solutions work on top of Kubernetes. Some of the popular ones are:

Kubeless: Kubeless is a Kubernetes-native serverless framework that allows you to deploy small bits of code (functions) without having to worry about the underlying infrastructure. It supports several languages, such as Python, Node.js, and Go, and can be used to build event-driven applications. The Kubeless project is no longer maintained.

OpenFaaS: OpenFaaS (Function as a Service) is a popular open-source serverless framework that can be deployed on top of Kubernetes. It supports several programming languages, such as Python, Node.js, and Go, and provides features such as autoscaling, metrics, and a CLI for deploying and managing functions.

Fission: Fission is a Kubernetes-native serverless framework that allows you to deploy functions quickly and easily. It supports several programming languages such as Python, Node.js, and Go, and provides features such as auto-scaling, in-cluster builds, and a CLI for deploying and managing functions.

Kube9s: Kube9s is a Kubernetes-native serverless framework that provides an event-driven architecture for building applications. It supports several programming languages such as Python, Node.js, and Go, and provides features such as auto-scaling, event-driven workflows, and a CLI for deploying and managing functions.

KEDA: KEDA (Kubernetes Event-driven Autoscaling) acts as a Kubernetes operator: you deploy it to your Kubernetes cluster and configure it to scale your workloads, including down to zero, based on event metrics such as the number of messages waiting in a queue. KEDA provides scalers for many event sources, such as Kafka, RabbitMQ, Azure Service Bus, and more.

These are just a few examples of serverless solutions that work on top of Kubernetes. There are many other options available in the market, each with its own set of features and capabilities.

Q4. Can we use Knative with AWS Lambda or with Azure Functions?

AWS Lambda is a serverless computing service provided by Amazon Web Services (AWS). Similarly, Azure Functions is a serverless computing service provided by Microsoft Azure.

Knative is a platform that abstracts away the underlying infrastructure and provides a higher-level interface for developers to deploy their serverless workloads. Knative can run any container image that serves HTTP requests on the port Knative assigns, so AWS Lambda functions and Azure Functions can run on Knative once they have been packaged as container images that meet this contract.

To use AWS Lambda or Azure Functions code with Knative, package the function, together with an HTTP server or adapter that turns incoming requests into function invocations, as a container image, and deploy it to a Kubernetes cluster using Knative Serving. You can also use Knative Eventing to connect the resulting services to external event sources.
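As a sketch of such an adapter, the Python program below wraps a Lambda-style `handler(event, context)` function in a standard-library HTTP server that listens on the port given by the `PORT` environment variable, which Knative sets for the container (8080 is the usual default). The handler, its response shape, and the adapter are hypothetical illustrations, not AWS's Runtime Interface Client or Emulator, and Lambda-specific features such as the `context` object and AWS event-source integrations do not carry over.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, Tuple


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Hypothetical function written in the Lambda Python handler style."""
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"hello, {name}"})}


def invoke(raw_body: bytes) -> Tuple[int, bytes]:
    """Turn an HTTP request body into a handler call, and the handler result into a response."""
    try:
        event = json.loads(raw_body or b"{}")
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, b'{"error": "request body must be JSON"}'
    if not isinstance(event, dict):
        return 400, b'{"error": "request body must be a JSON object"}'
    result = handler(event, None)  # no Lambda context object exists outside AWS
    return int(result.get("statusCode", 200)), str(result.get("body", "")).encode("utf-8")


class Adapter(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        status, body = invoke(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    """Container entry point: listen on the port Knative passes in via $PORT."""
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), Adapter).serve_forever()
```

For a quick local check without starting the server, `invoke(b'{"name": "knative"}')` returns `(200, b'{"message": "hello, knative"}')`; in the container image, the entry point would call `serve()`. Keeping the translation in `invoke` separate from the HTTP plumbing makes the handler logic testable on its own.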

AUTHOR

Dhanesh U.K, Senior DevOps Architect