A single NVIDIA H100 GPU can cost upwards of $40,000. Yet millions of creators, developers, and enterprises worldwide are accessing GPU power worth hundreds of thousands of dollars for less than the price of a premium coffee subscription. Welcome to the GPU-as-a-Service revolution.

The digital landscape has reached an inflection point where computational demands have outpaced traditional infrastructure capabilities. While Moore's Law continues its relentless march, the gap between what modern applications require and what organizations can feasibly deploy on-premises has never been wider. GPU-as-a-Service (GPUaaS) isn't just bridging this gap—it's fundamentally restructuring how we approach high-performance computing in gaming, media production, and content creation.

The Market Imperative: Numbers That Demand Attention

The global cloud gaming market alone is projected to reach $23.9 billion by 2030, growing at a CAGR of 45.8% from 2023. More telling is the enterprise adoption rate: 73% of organizations now utilize cloud-based rendering services, up from 31% just three years ago. These aren't vanity metrics—they represent a seismic shift in computational architecture that forward-thinking CTOs and engineering leaders cannot ignore.

Consider the economics: A high-end workstation equipped with multiple RTX 4090s represents a $15,000-20,000 capital expenditure with a 3-year depreciation cycle. The same computational power, accessed through GPUaaS, costs approximately $2-4 per hour, so the purchase price alone buys roughly 3,750-10,000 hours of cloud time. A team that uses the hardware only a few hundred hours a year will not reach break-even within the depreciation window.
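
The break-even arithmetic for the workstation figures above can be checked with a short sketch. It assumes break-even is simply the purchase price divided by the hourly cloud rate, ignoring power, maintenance, and resale value; the function name `break_even_hours` is illustrative only.

```python
def break_even_hours(capex_usd: float, cloud_rate_usd_per_hour: float) -> float:
    """Hours of cloud usage whose cost equals the hardware purchase price."""
    if cloud_rate_usd_per_hour <= 0:
        raise ValueError("cloud rate must be positive")
    return capex_usd / cloud_rate_usd_per_hour


# Best case for buying: cheapest workstation, most expensive cloud rate.
low = break_even_hours(15_000, 4)
# Worst case for buying: priciest workstation, cheapest cloud rate.
high = break_even_hours(20_000, 2)
print(f"Break-even range: {low:,.0f} to {high:,.0f} hours")
```

Under these assumptions the range works out to 3,750 to 10,000 hours of usage.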

Cloud Gaming: The Infrastructure Behind Seamless Experiences

Technical Architecture and Performance Metrics

Modern cloud gaming platforms run on GPU clusters distributed across global edge locations. NVIDIA's GeForce NOW, for example, operates over 1,500 servers across 100+ locations, each equipped with RTX 4080 or RTX 4090-class hardware. The technical specifications reveal the scale:

- Latency Targets: Sub-40ms end-to-end latency for competitive gaming

- Bandwidth Requirements: 15-25 Mbps for 1080p60, 35-50 Mbps for 4K60

- GPU Utilization: Advanced containerization achieving 85-90% GPU utilization rates

- Concurrent Users: Individual GPU instances supporting 4-8 simultaneous 1080p streams through advanced scheduling
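
To put the bandwidth figures in the list above into more familiar terms, the following sketch converts a sustained bitrate into data transferred per hour of play. It assumes the stream runs at the quoted rate for the whole hour and uses decimal units (1 GB = 1000 MB); the helper name `gb_per_hour` is illustrative.

```python
def gb_per_hour(mbps: float) -> float:
    """Convert a sustained bitrate in megabits per second to gigabytes per hour."""
    megabits_per_hour = mbps * 3600      # 3600 seconds in an hour
    return megabits_per_hour / 8 / 1000  # 8 bits per byte, 1000 MB per GB


for label, low, high in [("1080p60", 15, 25), ("4K60", 35, 50)]:
    print(f"{label}: {gb_per_hour(low):.2f} to {gb_per_hour(high):.2f} GB per hour")
```

This matters for users on metered connections, where an hour of 4K streaming at the upper bound consumes 22.5 GB.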

Alongside containerization, hardware partitioning deserves particular attention. NVIDIA's Multi-Instance GPU (MIG) technology allows a single A100 to be partitioned into up to seven independent instances, each with dedicated memory and compute resources. This granular, hardware-level resource allocation enables providers to optimize cost-per-user while maintaining performance guarantees.
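
As a rough illustration of how a provider might assign user sessions to partitioned GPUs, here is a minimal first-fit-decreasing sketch. It is a deliberate simplification: it treats each GPU as seven interchangeable slices and ignores the fixed instance profiles and placement rules that real MIG configurations impose. The function `pack_requests` and the request sizes are hypothetical.

```python
from typing import List

SLICES_PER_GPU = 7  # a single A100 can be split into up to seven instances


def pack_requests(requests: List[int], slices_per_gpu: int = SLICES_PER_GPU) -> List[List[int]]:
    """Assign slice requests to GPUs using first-fit-decreasing.

    Each request is a number of slices. Returns one list of request
    sizes per GPU that had to be provisioned.
    """
    gpus: List[List[int]] = []
    for size in sorted(requests, reverse=True):
        if not 1 <= size <= slices_per_gpu:
            raise ValueError(f"request of {size} slices cannot fit on one GPU")
        for gpu in gpus:
            if sum(gpu) + size <= slices_per_gpu:
                gpu.append(size)  # fits on an already provisioned GPU
                break
        else:
            gpus.append([size])   # no room anywhere: provision another GPU
    return gpus


layout = pack_requests([3, 4, 2, 1, 1, 3])
print(f"GPUs needed: {len(layout)}, layout: {layout}")
```

Sorting the largest requests first tends to leave fewer stranded slices than placing requests in arrival order, which is why this heuristic is a common starting point for bin-packing problems.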

Business Impact and ROI Analysis

For enterprise decision-makers, the financial implications are compelling. Traditional gaming infrastructure requires significant upfront investment with uncertain utilization patterns. Cloud gaming platforms report average GPU utilization rates of 60-70% compared to typical enterprise on-premises utilization of 15-25%.

Cost Comparison Analysis:

- On-premises RTX 4090 setup: $2,000 initial cost + $200/month operational expenses

- Cloud gaming equivalent: $0 initial cost + $3-5/hour usage-based pricing

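- Break-even point: roughly 880-1,470 hours of usage in the first year, counting the initial cost plus 12 months of operational expenses ($4,400 in total)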
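
The comparison above can be reproduced with a small sketch that includes recurring operating expenses as well as the initial outlay. It assumes a 12-month horizon and a flat hourly cloud rate with no reserved or spot discounts; the function names are illustrative.

```python
def on_prem_cost(initial_usd: float, monthly_opex_usd: float, months: int) -> float:
    """Total on-premises cost over the period: purchase plus operating expenses."""
    return initial_usd + monthly_opex_usd * months


def usage_break_even_hours(initial_usd: float, monthly_opex_usd: float,
                           months: int, cloud_rate_usd_per_hour: float) -> float:
    """Cloud hours in the period that would cost as much as owning the hardware."""
    return on_prem_cost(initial_usd, monthly_opex_usd, months) / cloud_rate_usd_per_hour


first_year = on_prem_cost(2_000, 200, 12)
print(f"First-year on-premises cost: ${first_year:,.0f}")
for rate in (5, 3):
    hours = usage_break_even_hours(2_000, 200, 12, rate)
    print(f"At ${rate}/hour, break-even is about {hours:,.0f} hours")
```

Below the break-even usage, paying by the hour is cheaper; above it, owning the card wins under these assumptions.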

Video Rendering: Transforming Post-Production Economics

Computational Complexity and Scale

Modern video production pushes computational boundaries that would have been inconceivable a decade ago. 8K video editing requires sustained memory bandwidth exceeding 1.5 TB/s, while real-time ray tracing for film production demands computational throughput measured in hundreds of teraFLOPS.

The numbers illustrate the challenge:

- 4K Video Rendering: 2-4 RTX 4090s for real-time playback

- 8K Production: 8-16 high-end GPUs for complex timeline operations

- VFX Compositing: Up to 32+ GPUs for photorealistic rendering

- AI-Enhanced Workflows: Additional 50-100% GPU overhead for ML-accelerated effects
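
The effect of the 50-100% AI overhead on the GPU counts listed above is easy to work out. The sketch below assumes the overhead applies uniformly to the base GPU count and rounds up to whole GPUs; `gpus_with_ai_overhead` is an illustrative helper, not a vendor sizing tool.

```python
import math


def gpus_with_ai_overhead(base_gpus: int, overhead_pct: int) -> int:
    """GPU count after adding a percentage overhead, rounded up to whole GPUs."""
    return math.ceil(base_gpus * (100 + overhead_pct) / 100)


# 8K production range from the list above: 8 to 16 GPUs before AI effects.
low = gpus_with_ai_overhead(8, 50)
high = gpus_with_ai_overhead(16, 100)
print(f"8K production with ML-accelerated effects: {low} to {high} GPUs")
```

For the 8K production range this gives 12 to 32 GPUs, which shows how quickly AI-enhanced workflows can push a project beyond what a single workstation can hold.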

Cloud rendering services have evolved beyond simple compute provision to offer specialized, optimized environments. Adobe's Creative Cloud integration with AWS and Google Cloud provides pre-configured instances with CUDA-optimized Creative Suite installations, reducing setup time from hours to minutes.

Performance Benchmarks and Optimization

Recent benchmarks demonstrate the tangible advantages of cloud-based rendering:

DaVinci Resolve 18 Performance Comparison:

- Local RTX 4090: 47 minutes for 10-minute 4K timeline with color grading

- AWS g5.24xlarge (4x A10G): 12 minutes for identical timeline

- Cost differential: $127 local hardware utilization vs. $48 cloud instance time
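
The benchmark figures above translate into a speedup factor and a relative cost saving as follows. This sketch only restates the quoted numbers; it makes no assumption about how the $127 and $48 figures were derived.

```python
def speedup(baseline_minutes: float, new_minutes: float) -> float:
    """How many times faster the new run is than the baseline."""
    return baseline_minutes / new_minutes


def saving_fraction(baseline_cost: float, new_cost: float) -> float:
    """Fraction of the baseline cost that is saved."""
    return (baseline_cost - new_cost) / baseline_cost


render_speedup = speedup(47, 12)       # local RTX 4090 vs. cloud instance
cost_saving = saving_fraction(127, 48)
print(f"Speedup: {render_speedup:.1f}x, cost saving: {cost_saving:.0%}")
```

In other words, the cloud run is a little under four times faster and roughly 62% cheaper on the quoted figures.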

The optimization extends beyond raw performance. Cloud providers implement automatic scaling that adjusts GPU allocation based on timeline complexity, ensuring consistent performance while minimizing costs during less intensive editing phases.
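
One simple way to express such a scaling policy is the proportional rule also used by Kubernetes' Horizontal Pod Autoscaler: scale the current allocation by the ratio of observed to target utilization. The sketch below is an assumption about how a provider might do this, not a description of any specific service; the function name, the 60% target, and the GPU bounds are hypothetical.

```python
def desired_gpus(current_gpus: int, utilization_pct: int, target_pct: int = 60,
                 min_gpus: int = 1, max_gpus: int = 16) -> int:
    """Proportional scaling: ceil(current * observed / target), clamped to bounds.

    Integer arithmetic is used for the ceiling to avoid float rounding surprises.
    """
    if target_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = -(-current_gpus * utilization_pct // target_pct)  # ceiling division
    return max(min_gpus, min(max_gpus, raw))


# Heavy timeline: 4 GPUs running at 90% against a 60% target, so scale up.
print(desired_gpus(4, 90))
# Light editing phase: 4 GPUs at 30%, so scale down.
print(desired_gpus(4, 30))
```

Clamping to a minimum and maximum keeps the allocation from collapsing to zero during idle periods or growing without limit on a runaway job.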

Content Creation: Democratizing High-Performance Tools

AI-Accelerated Workflows

The integration of AI in content creation workflows has sharply raised GPU requirements, particularly for memory. Stable Diffusion XL requires 24GB of VRAM for optimal performance, while large language models for script generation demand upwards of 80GB for inference.
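
A common rule of thumb shows where memory figures of this size come from: the memory needed just to hold a model's weights is roughly the parameter count multiplied by the bytes used per parameter. The sketch below applies that rule; it ignores activations, caches, and framework overhead, and the parameter counts are illustrative values rather than figures from any specific model.

```python
def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights in GB (1 GB = 1e9 bytes).

    One billion parameters at one byte each is one GB, so the product
    of the two arguments is already in GB.
    """
    return params_billion * bytes_per_param


# Illustrative sizes at 16-bit precision (2 bytes per parameter).
for size in (7, 40):
    print(f"{size}B parameters at 16-bit: about {weights_gb(size, 2):.0f} GB")
```

By this estimate a 40-billion-parameter model at 16-bit precision already fills an 80GB card before any working memory is counted, which is why larger models are often quantized or split across several GPUs.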

Cloud-based content creation platforms now offer:

- Instant Access to Latest Hardware: RTX 4090, A100, H100 availability without procurement cycles

- Specialized Software Stacks: Pre-configured environments for Blender, Maya, Unreal Engine

- Collaborative Workflows: Multi-user access to shared GPU resources with real-time synchronization

- Version Control Integration: Git-based asset management with automatic GPU provisioning

Economic Impact on Creative Industries

The democratization effect cannot be overstated. Independent content creators now access computational resources previously reserved for major studios:

Cost Accessibility Analysis:

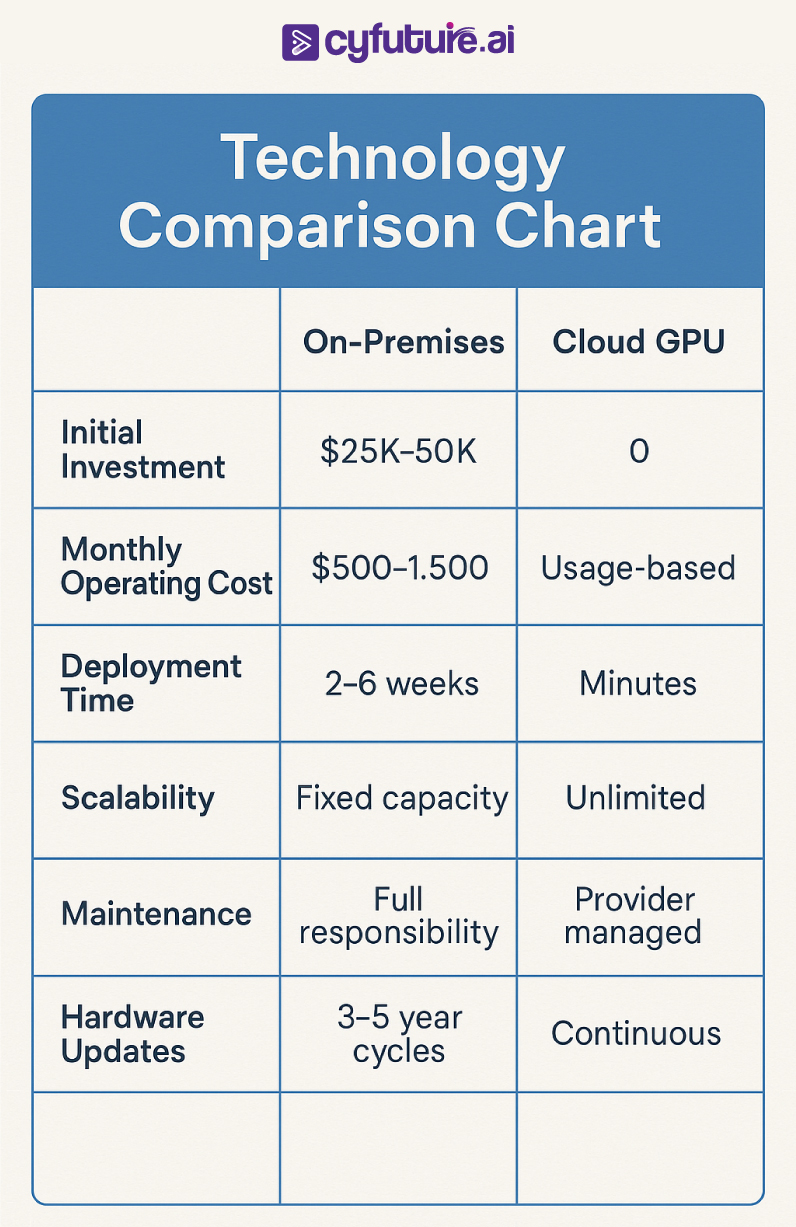

- Professional 3D workstation: $25,000-50,000 initial investment

- Equivalent cloud access: $5-15/hour for identical performance

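- Break-even for full-time creators: roughly 140-280 hours of use per month, assuming the workstation is amortized over 36 months and cloud access is billed at the $5/hour end of the range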

This accessibility has contributed to a 340% increase in independent 3D content production since 2020, according to industry analytics from Blender Foundation usage statistics.

Technical Implementation Considerations

Security and Compliance Architecture

Enterprise adoption requires robust security frameworks that address data sovereignty, intellectual property protection, and regulatory compliance. Leading GPUaaS providers implement:

Security Measures:

- End-to-end encryption with customer-managed keys

- Isolated compute environments with dedicated networking

- SOC 2 Type II, ISO 27001, and industry-specific certifications

- Zero-trust network architecture with microsegmentation

Compliance Frameworks:

- GDPR compliance for EU operations

- HIPAA compliance for healthcare content

- FedRAMP authorization for government contractors

- Industry-specific data residency requirements

Performance Optimization Strategies

Maximizing ROI requires strategic implementation approaches:

- Workload Profiling: Detailed analysis of GPU utilization patterns to optimize instance selection

- Auto-scaling Implementation: Dynamic resource allocation based on computational demand

- Cost Monitoring: Real-time usage tracking with automated budget controls

- Performance Benchmarking: Continuous optimization through A/B testing of instance configurations
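
The cost-monitoring item in the list above can be sketched as a small budget guard that accumulates spend and reports when a threshold is crossed. This is a minimal illustration under stated assumptions: the class name `BudgetGuard`, the 80% alert threshold, and the three status strings are all hypothetical, and a real deployment would read usage from the provider's billing data rather than from manual calls.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetGuard:
    """Tracks GPU spend against a monthly budget."""
    monthly_budget_usd: float
    alert_fraction: float = 0.8  # warn once this share of the budget is used
    spent_usd: float = field(default=0.0, init=False)

    def record(self, hours: float, rate_usd_per_hour: float) -> str:
        """Add usage and return 'ok', 'alert', or 'stop'."""
        self.spent_usd += hours * rate_usd_per_hour
        if self.spent_usd >= self.monthly_budget_usd:
            return "stop"   # budget exhausted: halt new jobs
        if self.spent_usd >= self.alert_fraction * self.monthly_budget_usd:
            return "alert"  # approaching the limit: notify the owner
        return "ok"


guard = BudgetGuard(monthly_budget_usd=1_000)
print(guard.record(100, 4))  # $400 spent so far
print(guard.record(125, 4))  # $900 spent so far
print(guard.record(50, 4))   # $1,100 spent so far
```

Separating the alert threshold from the hard stop gives teams time to react before running jobs are interrupted.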

Future Trajectory and Strategic Implications

Emerging Technologies Integration

The convergence of GPUaaS with emerging technologies presents unprecedented opportunities:

- Neural Radiance Fields (NeRF): Real-time 3D scene reconstruction requiring 100+ TFLOPS

- Metaverse Development: Persistent virtual world hosting demanding 1000+ concurrent GPU streams

- Real-time Ray Tracing: Photorealistic rendering at interactive frame rates

- Quantum-Classical Hybrid Computing: GPU acceleration for quantum simulation algorithms

Market Evolution Predictions

Industry analysis suggests several transformative trends:

- Edge Computing Integration: Sub-10ms latency through distributed GPU clusters

- Specialized Silicon: Purpose-built chips for specific rendering and gaming workloads

- Sustainable Computing: Carbon-neutral cloud operations through renewable energy integration

- Federated GPU Networks: Peer-to-peer GPU sharing protocols for distributed rendering
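
The sub-10ms latency target in the list above implies a hard limit on how far a user can be from the GPU, because signals in optical fiber travel at roughly two-thirds the speed of light, or about 200 km per millisecond. The sketch below estimates that limit; the processing overhead value is an illustrative assumption, and real networks add routing and queuing delays on top.

```python
FIBER_KM_PER_MS = 200.0  # approximate one-way signal speed in optical fiber


def max_distance_km(round_trip_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Largest one-way fiber distance that fits within a round-trip latency budget."""
    network_ms = round_trip_budget_ms - processing_ms
    if network_ms <= 0:
        return 0.0
    return network_ms / 2 * FIBER_KM_PER_MS  # half the time is spent in each direction


print(f"Propagation only: {max_distance_km(10):.0f} km")
print(f"With 6 ms of encode, render, and decode time: {max_distance_km(10, 6):.0f} km")
```

Even with no processing time at all, a 10 ms round trip limits the server to about 1,000 km from the user, which is why this target depends on GPU clusters placed close to population centers.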

Conclusion: The Computational Future is Distributed

The transition to GPU-as-a-Service represents more than technological evolution—it's a fundamental restructuring of how organizations approach computational resources. The financial metrics are compelling: 60-80% cost reductions for variable workloads, 90% faster deployment cycles, and access to hardware configurations that would require millions in capital expenditure.

For technology leaders, the question isn't whether to adopt GPUaaS, but how quickly strategic implementation can be achieved while maintaining competitive advantage. The organizations that master this transition will define the next decade of digital innovation, while those that hesitate risk computational obsolescence in an increasingly GPU-dependent technological landscape.

The $50 billion GPU revolution isn't coming—it's here. The only question remaining is whether your organization will lead or follow in this new computational paradigm.